Synchronous multi-region (3+ regions)

To protect against the failure of an entire cloud region, you can deploy YugabyteDB across multiple regions as a synchronously replicated multi-region cluster. In such a cluster, data is replicated across a minimum of 3 nodes in 3 regions with a replication factor (RF) of 3. If a region fails, the cluster continues to serve data requests from the remaining regions: YugabyteDB automatically fails over to the nodes in the other two regions, and the tablets being failed over are evenly distributed across those two regions.
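The RF 3 arithmetic works because Raft commits a write once a strict majority of replicas acknowledge it, so a group of RF replicas tolerates RF minus majority failures. The following Java sketch assumes one replica per region; QuorumMath is a hypothetical helper, not part of any YugabyteDB API.

```java
/** Quorum arithmetic for a Raft group, assuming one replica per region. */
final class QuorumMath {
    private QuorumMath() {}

    /** Replicas that must acknowledge a write: a strict majority of rf. */
    static int majority(int rf) {
        if (rf < 1) throw new IllegalArgumentException("rf must be >= 1");
        return rf / 2 + 1;
    }

    /** Region failures the group survives while a majority remains available. */
    static int tolerableFailures(int rf) {
        return rf - majority(rf);
    }

    public static void main(String[] args) {
        for (int rf : new int[] {3, 5}) {
            System.out.printf("RF=%d: majority=%d, tolerates %d region failure(s)%n",
                    rf, majority(rf), tolerableFailures(rf));
        }
    }
}
```

With RF 3, a majority is 2 replicas, so losing any one region still leaves a quorum in the other two.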

This deployment provides the following advantages:

- Resilience. Putting cluster nodes in different regions provides a higher degree of failure independence.

- Consistency. All writes are synchronously replicated. Transactions are globally consistent.

Create a Replicate across regions cluster

Before you can create a multi-region cluster in YugabyteDB Aeon, you need to add your billing profile and payment method, or you can request a free trial.

To create a multi-region cluster with synchronous replication, refer to Replicate across regions. For best results, set up your environment as follows:

- Each region in a multi-region cluster must be deployed in a VPC. In AWS, this means creating a VPC for each region. In GCP, make sure your VPC includes the regions where you want to deploy the cluster. You need to create the VPCs before you deploy the cluster.

- Set up a peering connection to an application VPC where you can host the YB Workload Simulator application. If your cluster is deployed in AWS (that is, has a separate VPC for each region), peer the application VPC with each cluster VPC.

- Copy the YB Workload Simulator application to the peered VPC and run it from there.

YB Workload Simulator uses the YugabyteDB JDBC Smart Driver. You can run the application from your computer by enabling Public Access on the cluster, but to use the load balancing features of the driver, an application must be deployed in a VPC that has been peered with the cluster VPC. For more information, refer to Using smart drivers with YugabyteDB Aeon.

Start a workload

Follow the setup instructions to install the YB Workload Simulator application.

Configure the smart driver

The YugabyteDB JDBC Smart Driver performs uniform load balancing by default, meaning it uniformly distributes application connections across all the nodes in the cluster. However, in a multi-region cluster, it's more efficient to target regions closest to your application.
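To illustrate the difference between uniform and topology-aware balancing, here is a simplified Java model, not the driver's actual implementation. It assumes each new connection goes to the node currently holding the fewest connections, optionally restricted to nodes whose placement matches a cloud.region.zone key (with "*" as a zone wildcard). The node addresses and connection counts are hypothetical, and when no node matches, this model simply returns nothing; consult the driver documentation for its real fallback behavior.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Simplified model of uniform versus topology-aware connection placement (not the driver's code). */
final class TopologyBalancerModel {
    private TopologyBalancerModel() {}

    /** A cluster node, its placement, and how many connections the application holds to it. */
    record Node(String host, String cloud, String region, String zone, int connections) {}

    /** True if the node matches a cloud.region.zone key; in this model a zone of "*" matches any zone. */
    static boolean matches(Node n, String topologyKey) {
        String[] parts = topologyKey.split("\\.", -1);
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected cloud.region.zone, got: " + topologyKey);
        }
        return n.cloud().equals(parts[0])
                && n.region().equals(parts[1])
                && (parts[2].equals("*") || n.zone().equals(parts[2]));
    }

    /** Least-loaded node overall (topologyKey == null) or among nodes matching topologyKey. */
    static Optional<Node> pick(List<Node> nodes, String topologyKey) {
        return nodes.stream()
                .filter(n -> topologyKey == null || matches(n, topologyKey))
                .min(Comparator.comparingInt(Node::connections));
    }

    public static void main(String[] args) {
        // Hypothetical three-region cluster with one node per region.
        List<Node> nodes = List.of(
                new Node("10.0.1.10", "aws", "us-east-1", "us-east-1a", 4),
                new Node("10.1.1.10", "aws", "us-west-2", "us-west-2a", 1),
                new Node("10.2.1.10", "aws", "eu-west-1", "eu-west-1a", 2));
        // Uniform: the least-loaded node anywhere, here in us-west-2.
        System.out.println(pick(nodes, null).map(Node::host).orElse("none"));
        // Topology-aware: only nodes in us-east-1 are candidates.
        System.out.println(pick(nodes, "aws.us-east-1.*").map(Node::host).orElse("none"));
    }
}
```

Under uniform balancing, an application in us-east-1 can end up connected to a distant region; the topology key keeps connections local.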

If you are running the workload simulator from a peered VPC, you can configure the smart driver with topology load balancing to limit connections to the closest region.

To turn on topology load balancing, start the application as usual, adding the following flag:

-Dspring.datasource.hikari.data-source-properties.topologyKeys=<cloud.region.zone>

Here, cloud.region.zone is the location (cloud provider, region, and zone) of the cluster region where your application is hosted.
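If you connect without Spring, the smart driver also accepts these settings as JDBC URL properties. The following minimal Java sketch assumes the load-balance and topology-keys URL properties of the YugabyteDB JDBC Smart Driver (check the driver documentation for your version). SmartDriverUrl and the host name are hypothetical, and the SSL and credential settings needed to connect to YugabyteDB Aeon are omitted.

```java
import java.util.Arrays;

/** Builds a YugabyteDB JDBC Smart Driver URL with topology-aware load balancing enabled. */
final class SmartDriverUrl {
    private SmartDriverUrl() {}

    /** topologyKeys is one or more comma-separated cloud.region.zone entries. */
    static String build(String host, int port, String database, String topologyKeys) {
        for (String key : topologyKeys.split(",")) {
            String[] parts = key.split("\\.", -1);
            if (parts.length != 3 || Arrays.stream(parts).anyMatch(String::isEmpty)) {
                throw new IllegalArgumentException("expected cloud.region.zone, got: " + key);
            }
        }
        return String.format("jdbc:yugabytedb://%s:%d/%s?load-balance=true&topology-keys=%s",
                host, port, database, topologyKeys);
    }

    public static void main(String[] args) {
        // Hypothetical host name; 5433 is the default YSQL port.
        System.out.println(build("my-cluster.example.com", 5433, "yugabyte", "aws.us-east-1.*"));
    }
}
```

Validating the key format up front catches a common mistake, such as passing only cloud.region, before the driver is involved.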

After you are connected, start a workload.

View cluster activity

To verify that the application is running correctly, navigate to the application UI at http://localhost:8080/ to view the cluster network diagram and Latency and Throughput charts for the running workload.

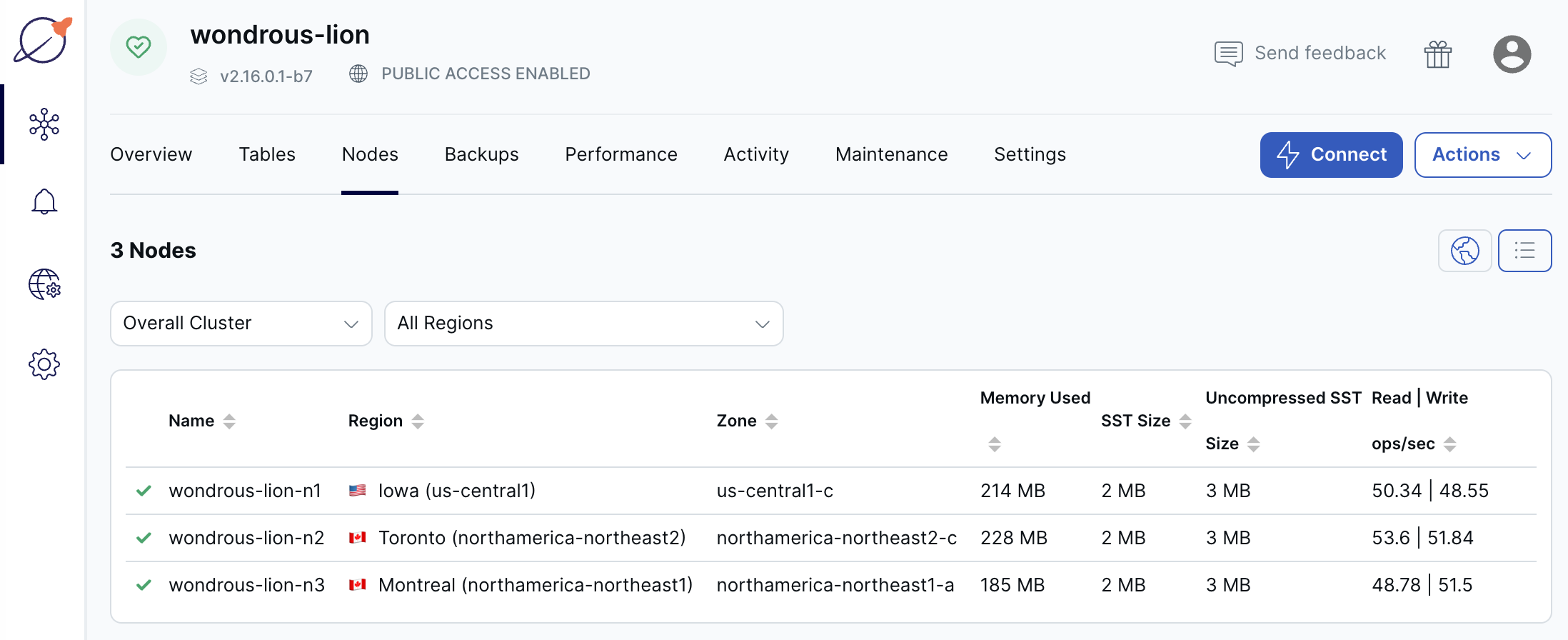

To view a table of per-node statistics for the cluster, in YugabyteDB Aeon, do the following:

- On the Clusters page, select the cluster.

- Select Nodes to view the total read and write operations for each node, as shown in the following illustration.

Note that read and write operations are roughly the same across all the nodes, indicating that the load is distributed uniformly.

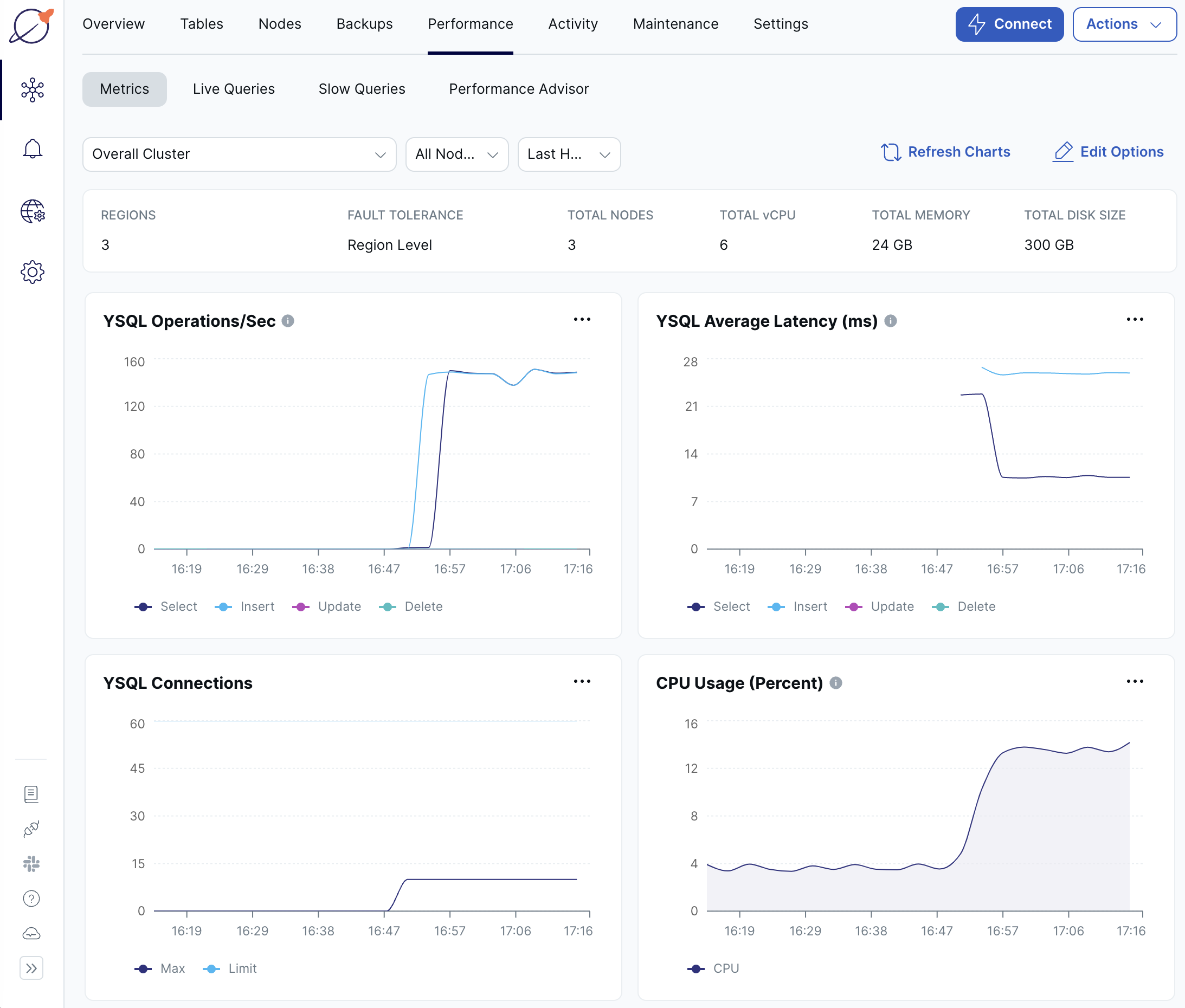

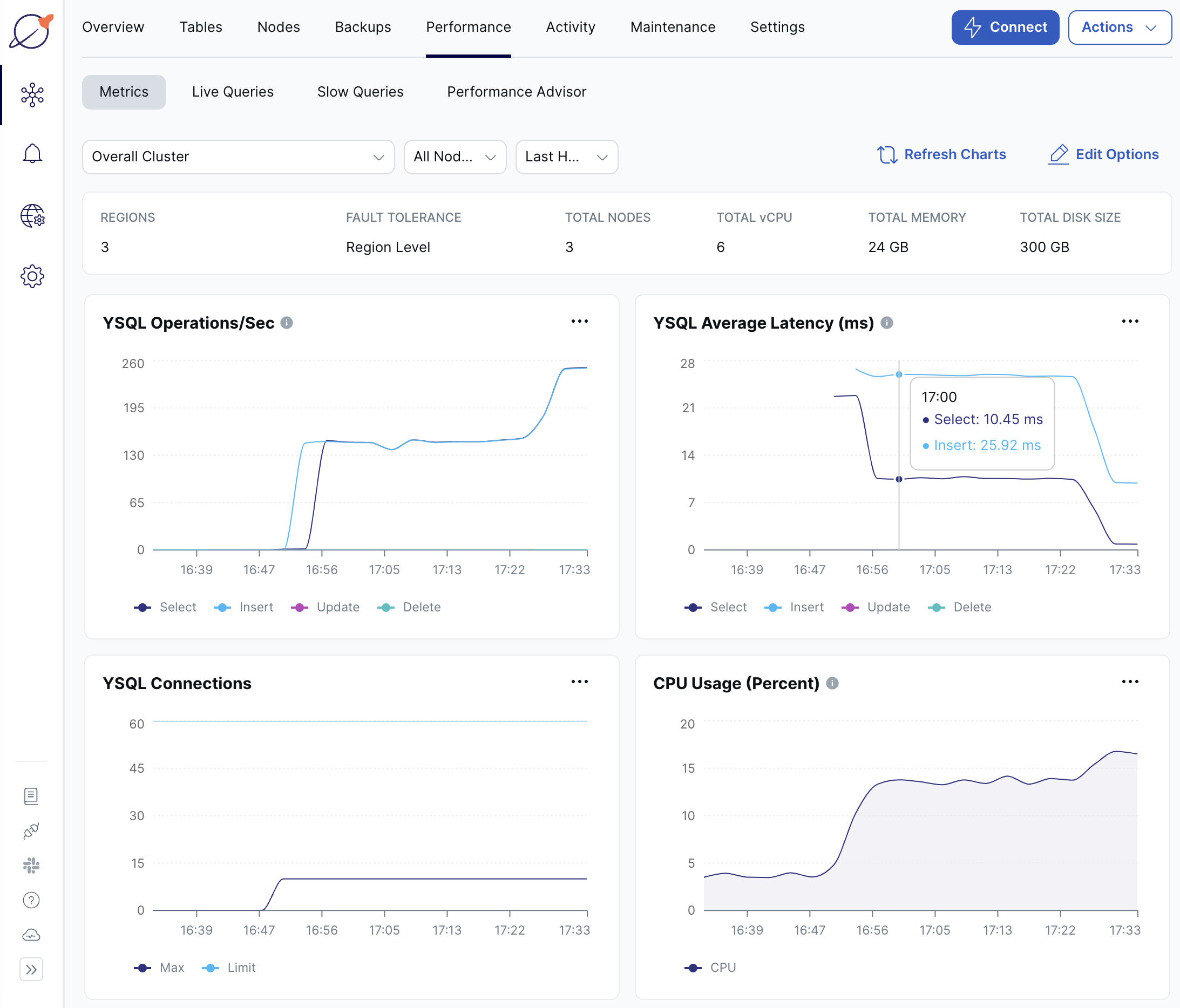

To view your cluster metrics such as YSQL Operations/Second and YSQL Average Latency, in YugabyteDB Aeon, select the cluster Performance tab. You should see similar charts as shown in the following illustration:

Tuning latencies

Latency in a multi-region cluster depends on the distance and network packet transfer times between the nodes of the cluster and between the cluster and the client. Because the tablet leader replicates write operations across a majority of tablet peers before sending a response to the client, all writes involve cross-region communication between tablet peers.
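As a rough model of the write path: the leader counts as one acknowledgement, so it waits only for the fastest followers needed to reach a majority. With RF 3, that is the single fastest of its two followers. The following Java sketch ignores disk writes, processing time, and the client-to-leader hop; CommitLatency and the round-trip times are hypothetical.

```java
import java.util.Arrays;

/** Simplified model of Raft write commit latency in a multi-region tablet. */
final class CommitLatency {
    private CommitLatency() {}

    /**
     * The leader counts as one acknowledgement, so it needs (majority - 1) follower acks.
     * Commit time is therefore the (majority - 1)-th smallest leader-to-follower round trip.
     */
    static double commitMs(double[] followerRttMs) {
        int rf = followerRttMs.length + 1;
        int needed = (rf / 2 + 1) - 1;
        if (needed == 0) return 0.0; // RF 1: no replication round trip
        double[] sorted = followerRttMs.clone();
        Arrays.sort(sorted);
        return sorted[needed - 1];
    }

    public static void main(String[] args) {
        // Hypothetical RTTs from a leader in one region to followers in the other two.
        double[] rtts = {20.0, 70.0};
        System.out.println("Commit latency ~ " + commitMs(rtts) + " ms");
    }
}
```

Because at least one follower is in another region, the commit always includes a cross-region round trip; placing regions close together shortens it.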

For best performance and lower data transfer costs, minimize data transfers between cloud providers and between regions. You do this by locating your cluster as close to your applications as possible:

- Use the same cloud provider as your application.

- Locate your cluster in the same region as your application.

- Peer your cluster with the VPC hosting your application.

Follower reads

YugabyteDB offers tunable global reads that allow read requests to trade off some consistency for lower read latency. By default, read requests in a YugabyteDB cluster are handled by the leader of the Raft group associated with the target tablet to ensure strong consistency. In situations where you are willing to sacrifice some consistency in favor of lower latency, you can choose to read from a tablet follower that is closer to the client rather than from the leader. YugabyteDB also allows you to specify the maximum staleness of data when reading from tablet followers.
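In YSQL, follower reads are controlled with session settings. The following minimal Java sketch assumes the yb_read_from_followers and yb_follower_read_staleness_ms settings, and that follower reads apply only to read-only transactions (verify both against the follower reads documentation for your version). FollowerReads is a hypothetical helper, and 10 seconds is an arbitrary example staleness.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.List;

/** Session settings that let YSQL reads be served by nearby tablet followers. */
final class FollowerReads {
    private FollowerReads() {}

    /** Statements for a session that may read from followers with bounded staleness. */
    static List<String> sessionSettings(long maxStalenessMs) {
        if (maxStalenessMs <= 0) throw new IllegalArgumentException("staleness must be positive");
        return List.of(
                "SET yb_read_from_followers = true",
                "SET yb_follower_read_staleness_ms = " + maxStalenessMs,
                // Follower reads are used only for read-only transactions.
                "SET default_transaction_read_only = true");
    }

    /** Applies the settings to an open connection, for example one from the smart driver. */
    static void apply(Connection conn, long maxStalenessMs) throws SQLException {
        try (Statement st = conn.createStatement()) {
            for (String sql : sessionSettings(maxStalenessMs)) {
                st.execute(sql);
            }
        }
    }

    public static void main(String[] args) {
        sessionSettings(10_000).forEach(System.out::println);
    }
}
```

Keeping these settings on a dedicated read-only connection pool leaves the default pool strongly consistent for writes.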

For more information on follower reads, refer to the Follower reads example.

Preferred region

If application reads and writes are known to be originating primarily from a single region, you can designate a preferred region, which pins the tablet leaders to that single region. As a result, the preferred region handles all read and write requests from clients. Non-preferred regions are used only for hosting tablet follower replicas.

For multi-row or multi-table transactional operations, colocating the leaders in a single zone or region can help reduce the number of cross-region network hops involved in executing a transaction.
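To see why pinning leaders near the application helps, the following Java sketch uses a rough latency model that includes the client-to-leader hop. It assumes an application in region A, RF 3 with one replica in each of hypothetical regions A, B, and C, made-up round-trip times, and single-tablet operations; it ignores processing and disk time. LeaderPlacement is a hypothetical helper.

```java
import java.util.Map;

/** Simplified latency model: leader placement versus client location (hypothetical RTTs). */
final class LeaderPlacement {
    private LeaderPlacement() {}

    // Hypothetical round-trip times in ms between regions A, B, C; same-region RTT is 1 ms.
    static final Map<String, Double> RTT = Map.of(
            "A-A", 1.0, "B-B", 1.0, "C-C", 1.0,
            "A-B", 20.0, "A-C", 70.0, "B-C", 60.0);

    static double rtt(String x, String y) {
        String key = x.compareTo(y) <= 0 ? x + "-" + y : y + "-" + x;
        return RTT.get(key);
    }

    /** A read served by the leader: one client-to-leader round trip. */
    static double readMs(String client, String leader) {
        return rtt(client, leader);
    }

    /** A write: client-to-leader round trip plus the leader's fastest follower ack (RF 3). */
    static double writeMs(String client, String leader) {
        double fastestFollower = Double.MAX_VALUE;
        for (String r : new String[] {"A", "B", "C"}) {
            if (!r.equals(leader)) fastestFollower = Math.min(fastestFollower, rtt(leader, r));
        }
        return rtt(client, leader) + fastestFollower;
    }

    public static void main(String[] args) {
        for (String leader : new String[] {"A", "B", "C"}) {
            System.out.printf("client A, leader %s: read %.0f ms, write %.0f ms%n",
                    leader, readMs("A", leader), writeMs("A", leader));
        }
    }
}
```

With these numbers, a leader in A gives about 1 ms reads and 21 ms writes, versus 20 ms and 40 ms with the leader in B, and 70 ms and 130 ms in C. Even with a local leader, the write still pays one cross-region round trip.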

Set the region you are connected to as preferred as follows:

- On the cluster Settings tab or under Actions, choose Edit Infrastructure to display the Edit Infrastructure dialog.

- For the region that your application is connected to, choose Set as preferred region for reads and writes.

- Click Confirm and Save Changes when you are done.

The operation can take several minutes, during which time some cluster operations are not available.

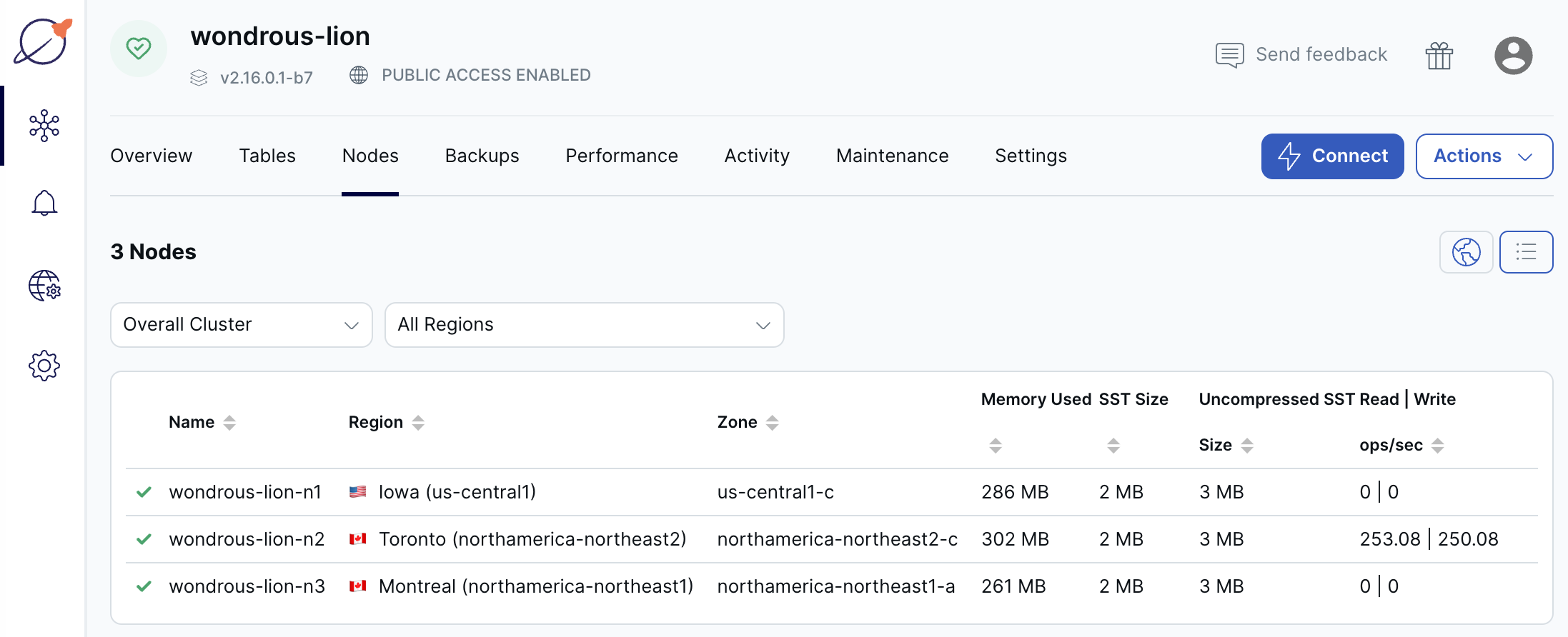

Verify that the load is moving to the preferred region on the Nodes tab.

When complete, the load is handled exclusively by the preferred region.

With the tablet leaders now all located in the region to which the application is connected, latencies decrease and throughput increases.

Note that cross-region latencies are unavoidable in the write path, given the need to ensure region-level automatic failover and repair.